Machine learning is the science and art of extracting useful information from large and complex datasets. It draws on powerful tools from statistical inference, function approximation, dynamical systems, and parallel and distributed computing. It also takes a significant interest in information processing in biological brains and perceptual systems.

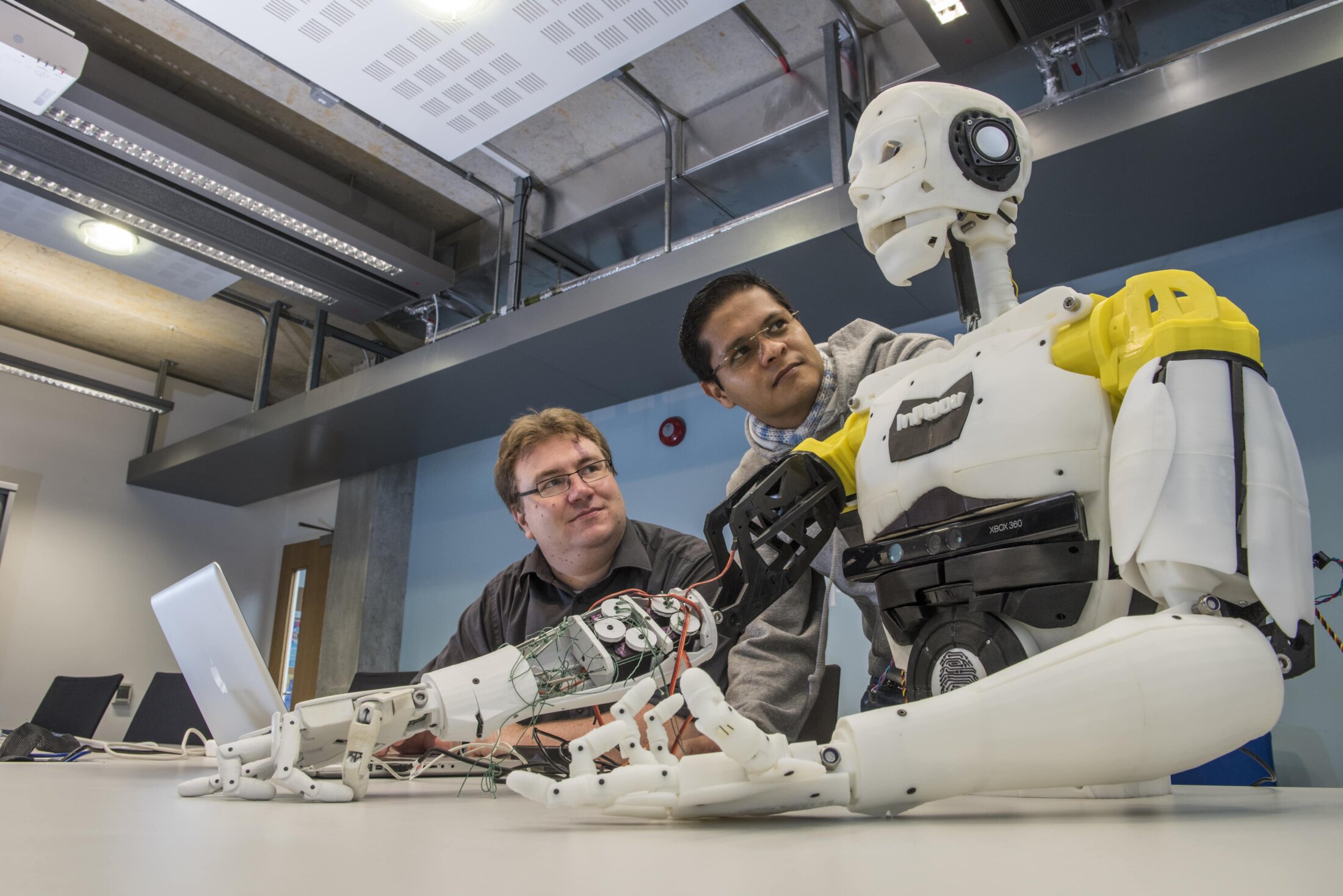

We are committed to developing the next generation of researchers by creating a community of PhD students and postdocs unafraid of tackling difficult research problems. We recognise that academia, and machine learning in particular, can be highly competitive, with successes sometimes rare and short-lived. We counter this by celebrating all those brave enough to tackle original and important problems, and by focusing on collaboration rather than competition. We foster cooperation between students and encourage the exchange of knowledge and ideas through joint research, reading groups, coffee mornings and frequent informal gatherings.

We have a wide range of compute resources for machine learning research, including a large pool of GPUs. Last year the School further expanded our high-memory GPU capacity by adding the six-server Alpha cluster, which has a total of 24 GPUs with 48 GB of memory each and terabytes of local storage.

My research aims to understand how machine learning can drive sensor technology to build the next generation of ubiquitous monitoring systems for healthcare. My research team and I are working on a few projects related to digital epidemics. The first concerns digital contact tracing via Bluetooth technology. We also have a large Covid-19 dataset of chest x-rays, on which we are evaluating a cascaded transfer learning approach that has already been shown to achieve state-of-the-art results on human activity recognition from sensor data. We are constantly exploring new directions for collaboration, including projects related to x-ray computed tomography, post-operative recovery of cancer patients, and novel approaches to sensing biosignals.

I’m fascinated by representation. Learned representations of data, and intrinsic representations within models, are central to modern machine learning. We can build differentiable learning machines, but what do they learn? How robust are they? What architectural choices, constraints, inductive biases, and biases in the data influence the representations a machine learns? How do we understand or interpret these representations? Do certain biases lead to representations that correlate with features observed in biological brains, or with psychophysical notions of perception? Together with my team, I look at these questions from many directions. We’re developing the underlying mathematical theories, as well as performing large-scale empirical studies that look at everything from categorising the behaviour of individual neurons within a machine through to analysing emergent communication between multiple machines.